Prediction intervals provide a measure of uncertainty for predictions on individual observations. This post…

- builds up a motivating example

- describes factors that influence prediction intervals

- shows examples of how to build and review prediction intervals

This is the first of three posts on prediction intervals (Part 2 employs simulation techniques and Part 3 quantile regression). I use the R programming language and the tidyverse + tidymodels suite of packages to create all models and figures.

library(tidyverse)

library(tidymodels)

library(AmesHousing)

library(gt)

# function copied from here:

# https://github.com/rstudio/gt/issues/613#issuecomment-772072490

# (simpler solution should be implemented in future versions of {gt})

fmt_if_number <- function(..., digits = 2) {

input <- c(...)

fmt <- paste0("%.", digits, "f")

if (is.numeric(input)) return(sprintf(fmt, input))

return(input)

}Providing More Than Point Estimates

Imagine you are an analyst for a business to business (B2B) seller and are responsible for identifying appropriate prices for complicated products with non-standard selling practices1. If you have more than one or two variables that influence price, statistical or machine learning models offer useful techniques for determining the optimal way to combine features to pinpoint expected prices of future deals2 (of course margin, market positioning, and other business considerations also matter).

You might first build a model for expected sale price that is fit based on the patterns in historical sales data. The naive approach would be to use point estimates from this model as a reference. If a customer’s offer falls above the expected price, you interpret this as a “good” deal, if below, a “bad” deal.

As a stand-in for the large complicated products typically sold in B2B markets, I will use data on home sales from Ames, Iowa for my examples3.

Load in the data and then split it into a training dataset (for exploration and model development) and a validation dataset (holdout dataset not used in model training, reserved for evaluating model performance)4.

ames <- make_ames() %>%

mutate(Years_Old = Year_Sold - Year_Built,

Years_Old = ifelse(Years_Old < 0, 0, Years_Old))

set.seed(4595)

data_split <- initial_split(ames, strata = "Sale_Price", p = 0.75)

ames_train <- training(data_split)

ames_holdout <- testing(data_split) Specify preprocessing steps5 and a multiple linear regression model6 to predict Sale Price – actually \(\log_{10}{(Sale\:Price)}\)7.

lm_recipe <-

recipe(

Sale_Price ~ Lot_Area + Neighborhood + Years_Old + Gr_Liv_Area + Overall_Qual + Total_Bsmt_SF + Garage_Area,

data = ames_train

) %>%

step_log(Sale_Price, base = 10) %>%

step_log(Lot_Area, Gr_Liv_Area, base = 10) %>%

step_log(Total_Bsmt_SF, Garage_Area, base = 10, offset = 1) %>%

step_novel(Neighborhood, Overall_Qual) %>%

step_other(Neighborhood, Overall_Qual, threshold = 50) %>%

step_dummy(Neighborhood, Overall_Qual) %>%

step_interact(terms = ~contains("Neighborhood")*Lot_Area)

lm_mod <- linear_reg() %>%

set_engine(engine = "lm") %>%

set_mode("regression")Put together into a workflow and fit model:

lm_wf <- workflows::workflow() %>%

add_model(lm_mod) %>%

add_recipe(lm_recipe) %>%

fit(ames_train)Then make predictions on the holdout set.

data_preds <- predict(

workflows::pull_workflow_fit(lm_wf),

workflows::pull_workflow_prepped_recipe(lm_wf) %>% bake(ames_holdout)

) %>%

bind_cols(relocate(ames_holdout, Sale_Price)) %>%

mutate(.pred = 10^.pred) %>%

select(Sale_Price, .pred, Lot_Area, Neighborhood, Years_Old, Gr_Liv_Area, Overall_Qual, Total_Bsmt_SF, Garage_Area)The one-row table below shows that given the case of

Lot_Area= 31,770sqft ;Neighborhood= North Ames;Years_Old= 50yrs;Gr_Liv_Area: 1,656sqft;overall_Qual= “Above Average”;Total_Bsmt_SF= 1,080sqft;Garage_Area= 528sqft,

the model would predict the Sale_Price for the home to be $184,503.

data_preds %>%

select(-Sale_Price) %>%

head(1) %>%

gt::gt() %>%

gt::fmt(gt::everything(), fns = function(x) fmt_if_number(x, digits = 0))| .pred | Lot_Area | Neighborhood | Years_Old | Gr_Liv_Area | Overall_Qual | Total_Bsmt_SF | Garage_Area |

|---|---|---|---|---|---|---|---|

| 184503 | 31770 | 1 | 50 | 1656 | 6 | 1080 | 528 |

Using the naive approach, whether an offer is above or below this expected price may provide a first indication of whether the deal is ‘good’ or ‘bad’ for the seller8. The magnitude of the difference between the offer and the expected price is also important. However you often will care about how big this difference is relative to the underlying uncertainty in Sale_Price – which calls for the use of statistics, specifically predictive inference9.

Considering Uncertainty

Let’s consider a pretend offer of $180,000 for our example house. You might first think you ought to reject the offer due to it being ~$4,500 less than the expected price. However a less naive approach would be to compare the $180,000 offer in the context of the model’s observed accuracy when making predictions on a holdout set10.

We need to be careful regarding how we consider performance and the errors of our model. For this particular problem, the variability in our model’s errors increase with the magnitude of the predictions for Sale_Price11. We want an error metric that will not inflate with Sale_Price. Hence rather than review the difference between Sale_Price and Expected[Sale_Price], it is more appropriate to review either:

- \(\log(Sale\_Price)\) against \(Expected[\log(Sale\_Price)]\) OR

- an error metric that is in terms of percent of

Sale_Price

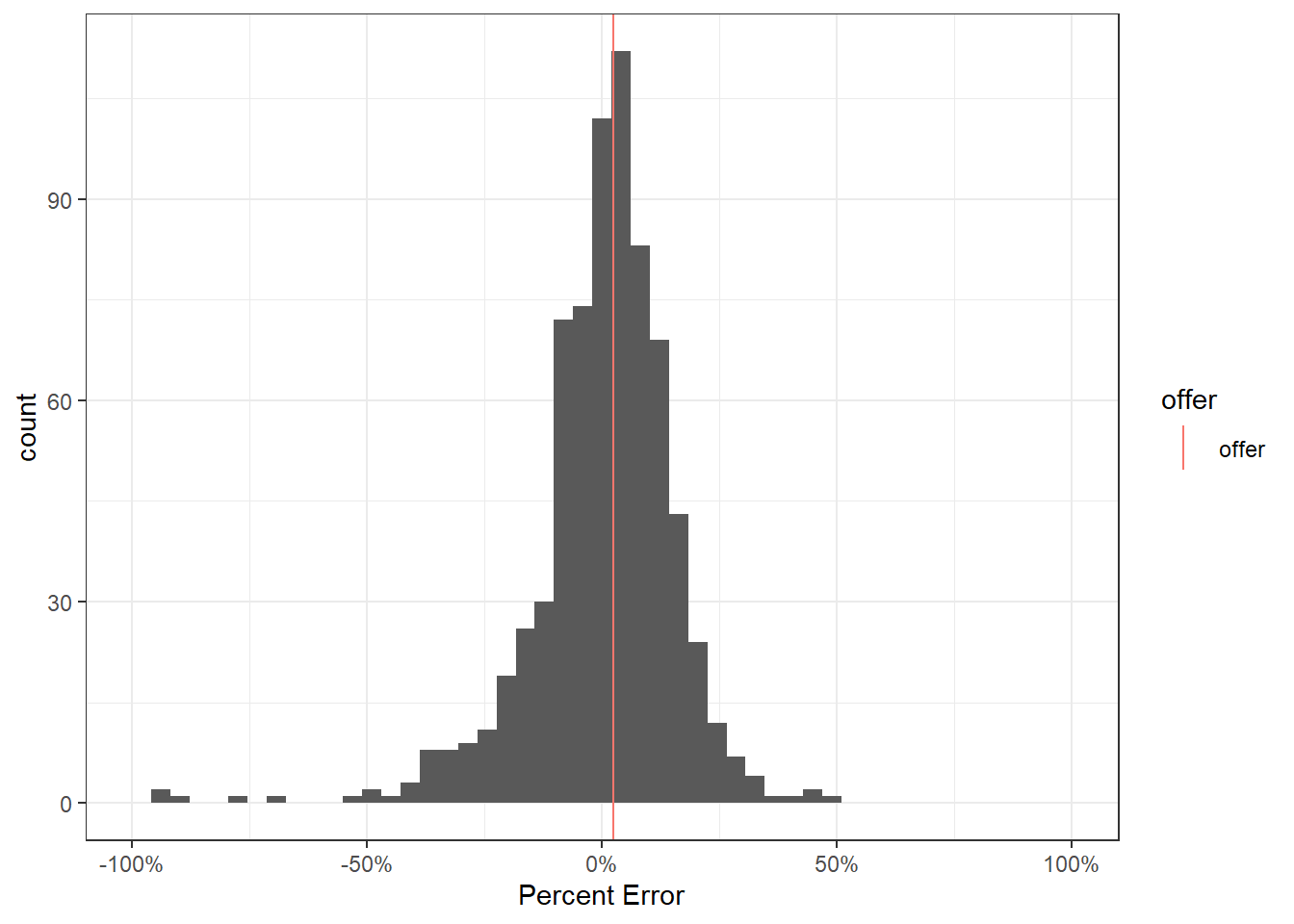

I will use the latter method and focus on the percentage error12. The offer of $180,000 corresponds with a 2.5% difference from expectations. This falls well within the distribution of errors seen by our model on a holdout dataset13.

offer <- tibble(PE = (184503 - 180000) / 180000, offer = "offer")

data_preds %>%

mutate(PE = (Sale_Price - .pred) / Sale_Price) %>%

relocate(PE) %>%

ggplot(aes(x = PE))+

geom_histogram(bins = 50)+

geom_vline(aes(xintercept = PE, color = offer), data = offer)+

scale_x_continuous(limits = c(-1, 1), labels = scales::percent)+

labs(x = "Percent Error")+

theme_bw()

On average our model is off by around ~12% of the actual Sale_Price observed.

data_preds %>%

yardstick::mape(Sale_Price, .pred) %>%

gt::gt() %>%

gt::fmt(gt::everything(), fns = function(x) fmt_if_number(x, digits = 1))| .metric | .estimator | .estimate |

|---|---|---|

| mape | standard | 11.8 |

90% of errors on the holdout dataset were between -27.1% and 21.3% of the actual Sale_Price.

data_preds %>%

mutate(PE = (Sale_Price - .pred) / Sale_Price,

APE = abs(PE)) %>%

summarise(quant_05 = quantile(PE, 0.05) * 100,

quant_95 = quantile(PE, 0.95) * 100) %>%

gt::gt() %>%

gt::fmt(gt::everything(), fns = function(x) fmt_if_number(x, digits = 1))| quant_05 | quant_95 |

|---|---|

| -27.1 | 21.3 |

All of which suggests the $180,000 offer does not represent a substantial outlier from the typical variability of observed from predicted Sale_Price14.

Is your model performant enough to be useful?

Before using the model for predictive inference, one should have reviewed overall performance on a holdout dataset to ensure the model is sufficiently accurate for the business context. For example, for our problem is an average error of ~12% and 90% prediction intervals of +/- ~25% of Sale_Price useful? If the answer is “no,” that suggests the need for more effort in improving the accuracy of the model (e.g. trying other transformations, features, model types)15. For our examples we are assuming the answer is ‘yes,’ our model is accurate enough (so it is appropriate to move-on and focus on prediction intervals).

Observation Specific Intervals

While a good starting point, a limitation with using aggregate error metrics to estimate intervals indiscriminately across observations16 is that different attributes may associate with different levels of uncertainty in the prediction. Specifically, predictions further from the centroid of the data generally have more uncertainty in the expected price.

A toy seesaw is a good analogy for this phenomenon:

As the angle of the bench changes… the further you are from the center, the more distance you will move up/down →

The angle of the seesaw represents variability in the model function being estimated. The distance from the seesaw’s pivot point corresponds with the distance from the centroid of the data.

This variability in estimating the model’s expected value is represented by dashed blue lines in the chart below of a generic linear model:

Because of this varying uncertainty of the expected value of the model (represented by the differing interval widths encapsulated by the blue lines at any value of x), it is preferable to determine a plausible price range that is specific to the attributes of the observation17.

The uncertainty described here corresponds with the confidence intervals and not quite as directly with the prediction intervals (the focus of this post). The distinction and relationship between these will be discussed in A Few Things to Know About Prediction Intervals.

A Few Things to Know About Prediction Intervals

If you are primarily interested in how to build and review prediction intervals you can skip to Review Prediction Intervals.

Prediction Intervals and Confidence Intervals

Prediction intervals and confidence intervals18 are often confused. Confidence intervals generally refer to making inferences on averages – this is most useful for evaluating parameter estimates, performance metrics, relationships with covariates, etc. However if you are interested in price ranges on individual observations (as in our case), prediction intervals are what you want19. Rob Hyndman has a helpful post where he describes the differences in more detail: The difference between prediction intervals and confidence intervals20.

In linear regression, “prediction intervals” refer to a type of confidence interval21, namely the confidence interval for a single observation (a “predictive confidence interval”). Confidence intervals have a specific statistical interpretation. In later posts on this topic, the intervals I create do not quite mirror the interpretations that go with a predictive confidence interval. I will use the term “prediction interval” somewhat loosely to refer to a plausible range of values for an observation22.

Analytic Method of Calculating Prediction Intervals

Linear regression models can produce prediction intervals analytically. The equation below is for simple linear regression (meaning just one ‘x’ input) but is helpful for gaining an intuition on the key parts that contribute to the width of a prediction interval:

\(\hat{y}_h \pm t_{(1-\alpha/2, n-2)} \times \sqrt{MSE \times \left( 1+\dfrac{1}{n} + \dfrac{(x_h-\bar{x})^2}{\sum(x_i-\bar{x})^2}\right)}\)

Pseudo-translation of key parts of equation:

- {\(\hat{y}_h\) : prediction}

- {\(t_{(1-\alpha/2, n-2)}\) : multiplier for desired level of confidence, e.g. 95%}

- {MSE: multiplier for average error}

- {\(\dfrac{MSE}{n}\) : multiplier based on number of observations – smaller n contributes to greater variability.}

- {\(MSE\dfrac{(x_h-\bar{x})^2}{\sum(x_i-\bar{x})^2}\) : multiplier for distance from centroid of data}

You will notice the equation for the prediction interval is very similar to that of the equation for a confidence interval23:

\(\hat{y}_h \pm t_{(1-\alpha/2, n-2)} \times \sqrt{MSE \left(\dfrac{1}{n} + \dfrac{(x_h-\bar{x})^2}{\sum(x_i-\bar{x})^2}\right)}\)

The variance for the prediction interval just has an extra Mean Squared Error (MSE) term24. The prediction interval is essentially the variance in estimating the model25 combined with the variability of individual observations in the sample.

Paraphrasing, the uncertainty in predictions can be thought of as coming from two sources:

- uncertainty in fitting the model, i.e. variability in determining the expected value of the target (‘target’ just means the variable of interest, the thing we are predicting)

- uncertainty in the sample, i.e. inherent variability of the target in observations

The first source may vary in part depending on position relative to the centroid (think back to the seesaw analogy in Observation Specific Intervals and the influence of position on amount of movement i.e. uncertainty). It will also vary depending on the number of observations – the more observations you have in your training data, the smaller the general variability due to model estimation will be. The latter part is assumed to be constant across observations26 and does not change as the number of observations increases.

Do not think of these sources of uncertainty as being compounded on one another to produce the prediction interval. They are added together27 and then the square root is taken. In most cases the variability due to the sample will be greater than the variability in estimating the expected value. Hence, the interval’s width due to uncertainty in estimating the model is somewhat ‘watered down’ by the variability in the sample. Therefore (for prediction intervals produced analytically at least) we will generally not see large differences in the widths of prediction intervals across observations (even at points that are relatively far from the centroid of the data). Therefore our prediction intervals in later sections of this post will not actually be that much different from those that might have been produced using aggregated error metrics similar to those discussed in Considering Uncertainty.

However understanding the intuition on these sources of uncertainty in prediction intervals is still helpful. In follow-up posts I use more flexible methods for building prediction intervals. These other methods can produce substantive differences in the width of prediction intervals between observations (in ways that depend on more than distance of an observation from the centroid of the data).

Visual Comparison of Prediction Intervals and Confidence Intervals

The chart below shows the key outputs concerning prediction that can be outputted by a generic linear model:

- expected value, i.e. the predictions, the average of the value of the target given a set of attributes (black line)

- confidence intervals, i.e. range for the expected values (red dashed line)

- prediction intervals (our primary interest in this post), i.e. range for the predictions on an individual observation (green dotted line)

Confidence intervals are more narrow (as they concern only uncertainty in the model’s estimation of the expected value). Prediction intervals are wider as they concern both model uncertainty and the sampling uncertainty of any observation (the latter of which contributes far greater variance). Both confidence and prediction intervals are wider the further the prediction is from the centroid of the data – however the effect is far greater for the confidence interval (as the uncertainty in the prediction interval is dominated by the random variance of the sample, which is assumed to be constant across observations). This explains why the curve of the confidence intervals is far more pronounced.

Inference or Prediction?

Predictive modeling is typically used either for making predictions or inferences. The assumptions associated with your model usually matter more when doing inference compared to prediction28. Therefore, to get a sense of how careful you should be regarding model assumptions for our example you might ask:

“Are prediction intervals an example of prediction or inference?”

The answer is, while it (kind of) depends…

really, prediction intervals should be thought of as a kind of inference.

For this post the prediction interval is explicitly an inference on the predictions. In a later post I will actually just be making predictions for quantiles at upper and lower bounds of interest. However, generally, intervals should be thought of as a kind of inference29. Therefore you should be thoughtful regarding the assumptions you are making about the nature of the data and your model specification30.

For example, an important assumption when doing predictive inference is heteroskedasticity of errors – which means that the variability of our errors should be constant across our predictions31. As mentioned in Considering Uncertainty, if building a model for Sale Price we would find that errors tend to increase with our predictions. Hence instead of building a model to predict Sale Price we build a model to predict \(\log_{10}(Sale\:Price)\) – this helps our model’s outputted prediction intervals to be more appropriate across observations32.

In follow up posts the methods I describe do not depend as strongly on assumptions to produce reliable prediction intervals33.

Cautions With Overfitting

You want the inferences and predictions of your model to be generalizable, i.e. usable outside of the context of your training dataset. This is why we holdout data to review performance: we want to review the model’s performance on data it has never seen. If your model overfits34 the training data, the prediction interval will often underestimate the expected variability in price and your prediction intervals may be too narrow. Two strategies you might use to correct for this:

- Consider a more simple model35

- Tune your prediction intervals based on coverage measured on holdout data (coverage represents the proportion of observations that actually fall within their prediction interval).

A quick heuristic to check if your model is overfitting is to compare the model’s performance on a holdout dataset against performance on the data that was used to train the model. If performance is substantially better on the training dataset, it may suggest the model has overfit.

Simpler model

If your model seems to be overfitting, you may want to try fitting a more simple model36. If your model has a small difference in performance metrics between training and holdout datasets, you likely will have more faith in the ranges given by the prediction intervals.

Problem with comparing difference in performance between training & holdout data

However, for the most part, your performance is going to always be better on the training data than on the holdout data37. With regard to overfitting, you really care about whether performance is worse on the holdout dataset compared to an alternative simpler model’s performance on the holdout set. You don’t really care if a model’s performance on training and holdout data is similar, just that performance on a holdout dataset is as good as possible.

Let’s take an example when building random forest models and tuning on the min_n attribute (smaller values for min_n38 represent more complicated models.):

See my gist for code

The gap in train-holdout (AKA, analysis-assessment39) performance is greater for more complicated models40 (which, as mentioned above, may signal overfitting). However more complicated models also perform better on the holdout data. Hence, we would likely select the complicated model41 even though the performance estimates on the training data will be the most unrealistic.

While a difference in performance on training and holdout datasets may provide a helpful indicator for overfitting, it is not really what you care about during model selection42.

Tune your intervals based on a holdout set

Pretend that your selected model has a substantial difference in performance when evaluated on training versus holdout data. You are worried that the width of your model’s outputted prediction intervals will be optimistically narrow. However you still want to keep this model because it has the best performance when evaluated on holdout data43. An alternative to fitting a more simple model is to adjust the confidence level of your prediction intervals, tuning them based on the coverage level on a holdout dataset. To describe this solution, I will define three ways of thinking about coverage:

- target coverage: The level of coverage you want to attain on a holdout dataset (i.e. the proportion of observations you want to fall within your prediction intervals).

- expected coverage: The level of confidence in the model for the prediction intervals, e.g. asking the model to predict 90% prediction intervals.

- empirical coverage: The level of coverage actually observed when evaluated on a dataset, typically a holdout dataset not used in training the model.

Ideally all three align. However let’s say in our hypothetical example you have a 90% target coverage. If our model is slightly overfit, you might see that a 90% expected coverage leads to an 85% empirical coverage on a holdout dataset. To align your target and empirical coverage at 90%, may require setting expected coverage at something like 93%44.

This is called “adaptive coverage” and is discussed briefly by Emmanuel Candes in the video below:

In a follow-up post, Quantile Regression for Prediction Intervals I will walk through a similar example where I tune the expected coverage.

Generalizability

Let’s check to see how performance of our model compares between our training and holdout datasets.

train_preds <- predict(

workflows::pull_workflow_fit(lm_wf),

workflows::pull_workflow_prepped_recipe(lm_wf) %>% bake(ames_train)

) %>%

bind_cols(relocate(ames_train, Sale_Price)) %>%

mutate(.pred = 10^.pred)

bind_rows(

yardstick::mape(train_preds, Sale_Price, .pred),

yardstick::mape(data_preds, Sale_Price, .pred)

) %>%

mutate(dataset = c("training", "validation")) %>%

gt::gt() %>%

gt::fmt(gt::everything(), fns = function(x) fmt_if_number(x, digits = 1))| .metric | .estimator | .estimate | dataset |

|---|---|---|---|

| mape | standard | 11.3 | training |

| mape | standard | 11.8 | validation |

Performance on the training and holdout datasets are not grossly different, hence there does not seem to be a major concern with overfitting in our model45.

Review Prediction Intervals

90% prediction interval for our example case:

preds_intervals <- predict(

workflows::pull_workflow_fit(lm_wf),

workflows::pull_workflow_prepped_recipe(lm_wf) %>% bake(ames_holdout),

type = "pred_int",

level = 0.90

) %>%

mutate(across(contains(".pred"), ~10^.x)) %>%

bind_cols(data_preds) %>%

select(contains(".pred"), Sale_Price, Lot_Area, Neighborhood, Years_Old, Gr_Liv_Area, Overall_Qual, Total_Bsmt_SF, Garage_Area)

# attr(preds_intervals, "level") <- NULL

preds_intervals %>%

select(-Sale_Price) %>%

slice(1) %>%

gt::gt() %>%

gt::fmt(gt::everything(), fns = function(x) fmt_if_number(x, digits = 0))| .pred_lower | .pred_upper | .pred | Lot_Area | Neighborhood | Years_Old | Gr_Liv_Area | Overall_Qual | Total_Bsmt_SF | Garage_Area |

|---|---|---|---|---|---|---|---|---|---|

| 140208 | 242793 | 184503 | 31770 | 1 | 50 | 1656 | 6 | 1080 | 528 |

This suggests that, given these attributes, we would expect that the Sale_Price for an individual observation would fall between $140,208 and $242,793 (with 90% confidence). Our example offer of $184,000 falls well within this range, meaning that – based on the model – the offer does not appear unreasonable.

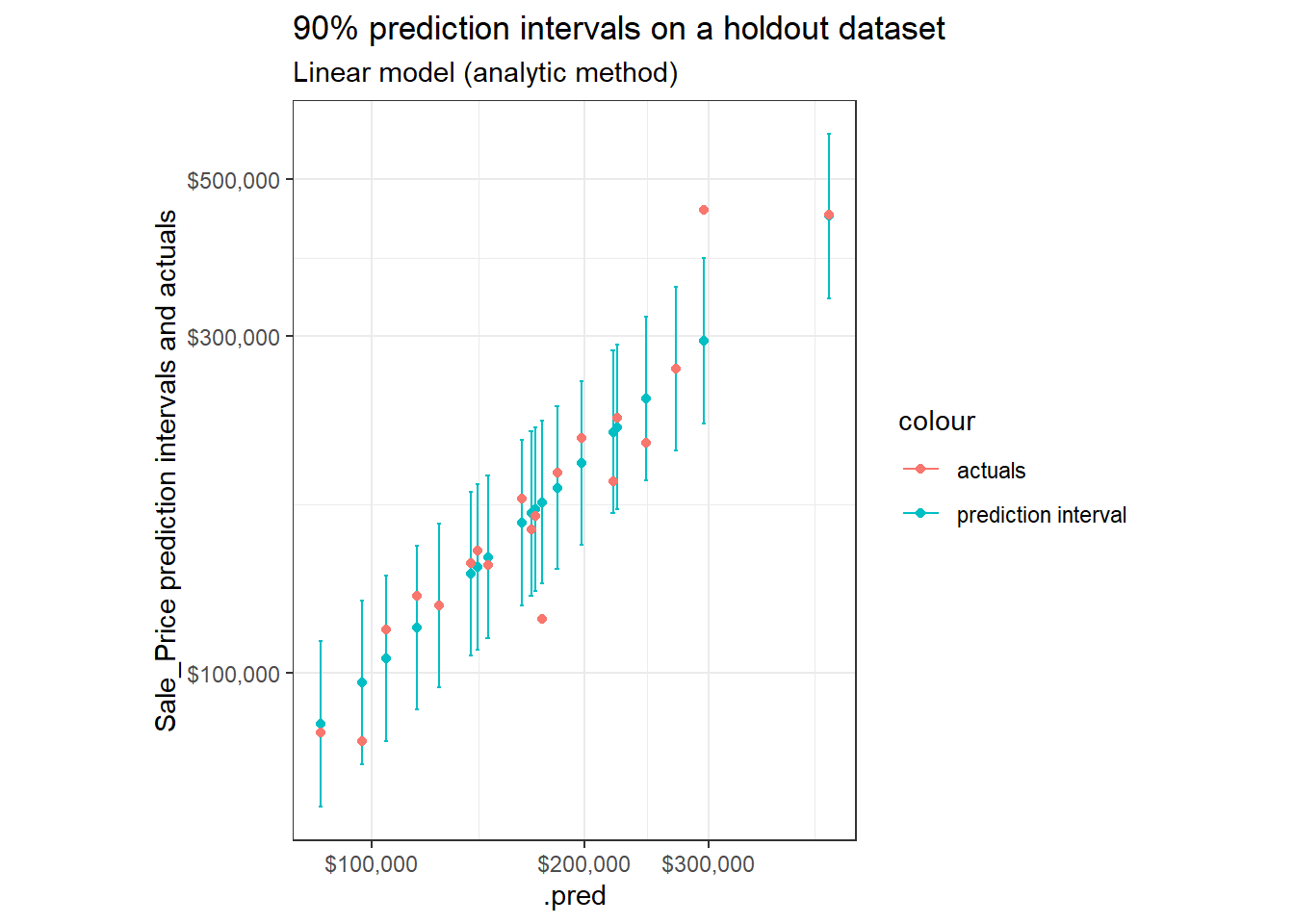

Let’s view 90% prediction intervals against actual prices on a sample of observations in our holdout dataset:

set.seed(1234)

p <- preds_intervals %>%

mutate(pred_interval = ggplot2::cut_number(Sale_Price, 10)) %>%

group_by(pred_interval) %>%

sample_n(2) %>%

ggplot(aes(x = .pred))+

geom_point(aes(y = .pred, color = "prediction interval"))+

geom_errorbar(aes(ymin = .pred_lower, ymax = .pred_upper, color = "prediction interval"))+

geom_point(aes(y = Sale_Price, color = "actuals"))+

labs(title = "90% prediction intervals on a holdout dataset",

subtitle = "Linear model (analytic method)",

y = "Sale_Price prediction intervals and actuals")+

theme_bw()+

coord_fixed()

p +

scale_x_log10(labels = scales::dollar)+

scale_y_log10(labels = scales::dollar)

Log transformations are applied on the x and y axis. Remember the model was built to predict \(\log_{10}{(Sale\:Price)}\) (as opposed to raw Sale_Price)46.

We would expect ~9 out of 10 observations to fall within their bands – which a cursory glance appears to be just about what we see.

Coverage

Let’s review what proportion of our holdout observations are actually “covered” by their 90% prediction intervals47.

preds_intervals %>%

mutate(covered = ifelse(Sale_Price >= .pred_lower & Sale_Price <= .pred_upper, 1, 0)) %>%

summarise(n = n(),

n_covered = sum(

covered

),

stderror = 100 * sd(covered) / sqrt(n),

coverage_prop = 100 * n_covered / n) %>%

gt::gt() %>%

gt::fmt(gt::everything(), fns = function(x) fmt_if_number(x, digits = 2))| n | n_covered | stderror | coverage_prop |

|---|---|---|---|

| 731.00 | 678.00 | 0.96 | 92.75 |

~93% of our holdout observations were actually covered48. We can likely trust these intervals aren’t too narrow49. It is usually better to be on the slightly conservative than optimistic side regarding coverage50.

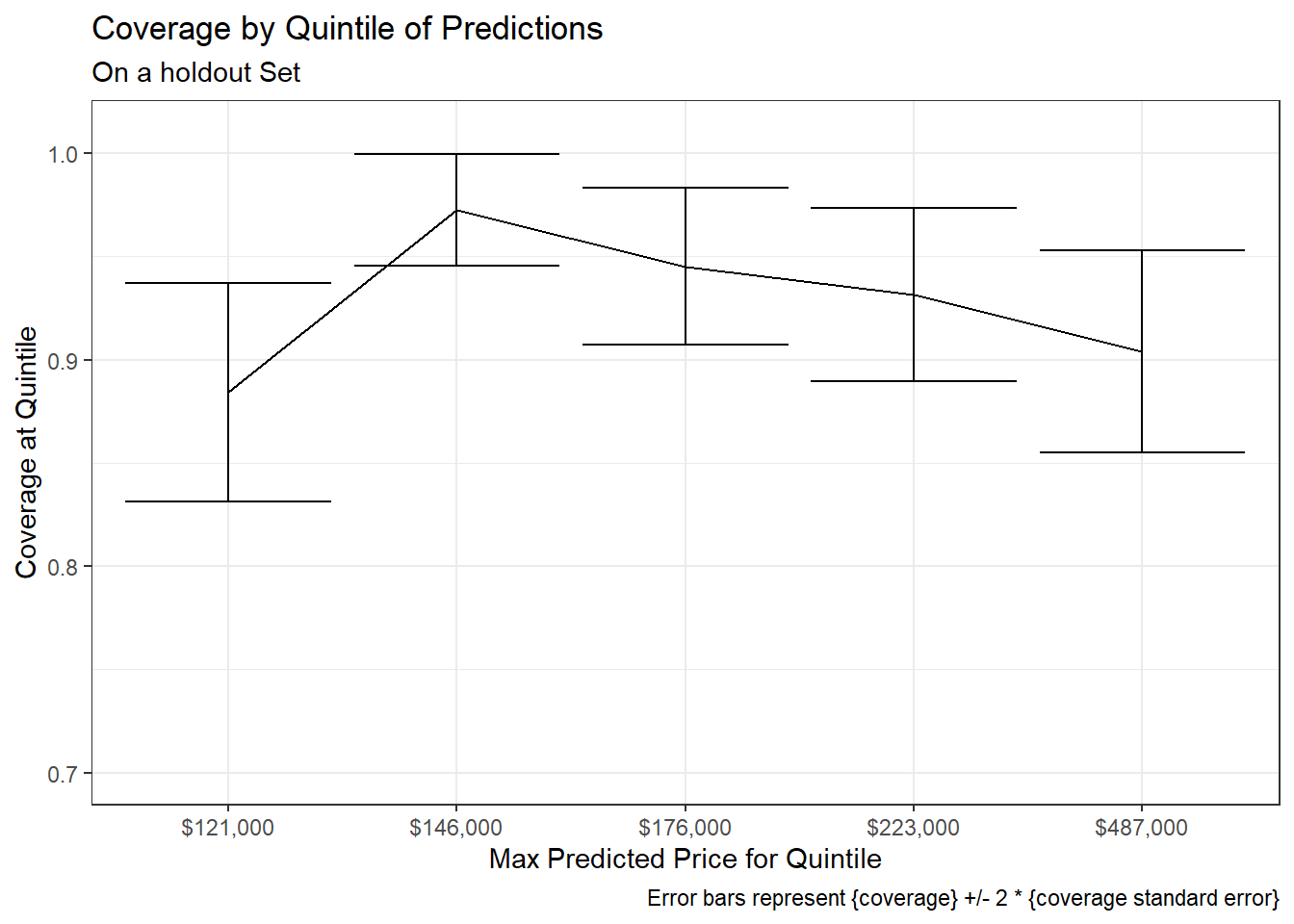

In addition to reviewing overall coverage, it may be helpful to review coverage across predictions. This may be helpful in providing evidence of whether we have “marginal” or “conditional” coverage51.

The chart below splits the holdout data into five segments ordered by predicted price52 with equal number of observations53 and checks the proportion covered over each quintile.

preds_intervals %>%

mutate(price_grouped = ggplot2::cut_number(.pred, 5)) %>%

mutate(covered = ifelse(Sale_Price >= .pred_lower & Sale_Price <= .pred_upper, 1, 0)) %>%

group_by(price_grouped) %>%

summarise(n = n(),

n_covered = sum(

covered

),

stderror = sd(covered) / sqrt(n),

n_prop = n_covered / n) %>%

mutate(x_tmp = str_sub(price_grouped, 2, -2)) %>%

separate(x_tmp, c("min", "max"), sep = ",") %>%

mutate(across(c(min, max), as.double)) %>%

ggplot(aes(x = forcats::fct_reorder(scales::dollar(max), max), y = n_prop))+

geom_line(aes(group = 1))+

geom_errorbar(aes(ymin = n_prop - 2 * stderror, ymax = n_prop + 2 * stderror))+

coord_cartesian(ylim = c(0.70, 1.01))+

# scale_x_discrete(guide = guide_axis(n.dodge = 2))+

labs(x = "Max Predicted Price for Quintile",

y = "Coverage at Quintile",

title = "Coverage by Quintile of Predictions",

subtitle = "On a holdout Set",

caption = "Error bars represent {coverage} +/- 2 * {coverage standard error}")+

theme_bw()

This suggests there may be slightly different levels of coverage at different quintiles (e.g. the cheapest predicted houses have less coverage than those in the 2nd quintile.)54.

A test for checking whether variability in the categorical variable covered (covered / not-covered) does not vary across the quintile of predicted Sale_Price (1st, 2nd, 3rd, 4th, 5th) could be done using a Chi-square statistical test:

preds_intervals %>%

mutate(price_grouped = ggplot2::cut_number(.pred, 5)) %>%

mutate(covered = ifelse(Sale_Price >= .pred_lower & Sale_Price <= .pred_upper, 1, 0)) %>%

with(chisq.test(price_grouped, covered)) %>%

pander::pander()| Test statistic | df | P value |

|---|---|---|

| 10.39 | 4 | 0.03436 * |

The results provide evidence that whether an observation is covered or not-covered may depend (to some extent) on which quintile of predicted Sale_Price the observation falls within55.

I will show in a follow-up post on Simulating Prediction Intervals intervals that seem to have more consistent coverage rates across predicted Sale_Price.

Interval Width

Another helpful way to evaluate prediction intervals is by their width. More narrow bands indicate a more precise model.

Remember from the section on Considering Uncertainty that the errors on raw Sale_Price increase with the size of the prediction. To adjust for this, an appropriate metric is interval width as a percentage of predicted Sale_Price56. I will review these across quintiles of the predictions.

lm_interval_widths <- preds_intervals %>%

mutate(interval_width = .pred_upper - .pred_lower,

interval_pred_ratio = interval_width / .pred) %>%

mutate(price_grouped = ggplot2::cut_number(.pred, 5)) %>%

group_by(price_grouped) %>%

summarise(n = n(),

mean_interval_width_percentage = mean(interval_pred_ratio),

stdev = sd(interval_pred_ratio),

stderror = stdev / sqrt(n)) %>%

mutate(x_tmp = str_sub(price_grouped, 2, -2)) %>%

separate(x_tmp, c("min", "max"), sep = ",") %>%

mutate(across(c(min, max), as.double)) %>%

select(-price_grouped)

lm_interval_widths %>%

mutate(across(c(mean_interval_width_percentage, stdev, stderror), ~.x*100)) %>%

gt::gt() %>%

gt::fmt_number(c("stdev", "stderror"), decimals = 2) %>%

gt::fmt_number("mean_interval_width_percentage", decimals = 1)| n | mean_interval_width_percentage | stdev | stderror | min | max |

|---|---|---|---|---|---|

| 147 | 54.3 | 0.46 | 0.04 | 43000 | 121000 |

| 146 | 54.0 | 0.29 | 0.02 | 121000 | 146000 |

| 146 | 54.0 | 0.38 | 0.03 | 146000 | 176000 |

| 146 | 54.1 | 0.49 | 0.04 | 176000 | 223000 |

| 146 | 54.1 | 0.33 | 0.03 | 223000 | 487000 |

The 90% interval represents an average of ~54% of the predicted Sale_Price. I.e. the difference between the upper and lower bounds of the prediction interval for Sale_Price will be about half of the predicted Sale_Price (to eye-ball this, see Prediction Intervals on Raw Sale Price).

The relative interval width seems to be consistent across values for predicted Sale_Price (~54% for all quintiles). There is also little difference in uncertainty between observations – varying on average less than half a percent between observations as indicated by the standard deviation.

Recall from Analytic Method of Calculating Prediction Intervals that the only factor contributing to differences in interval width across predictions is the distance of an observations from the centroid of the data. This has a small impact on relative interval width in our problem (given the number of observations and sample variance – which are treated as constant across observations)57. Methods used in future posts will show a greater diversity of interval widths.

Closing Notes

Prediction intervals provide an indicator for the uncertainty of individual predictions. They have advantages over point estimates in that they take into account the variability in the data to provide a “reasonable” range of values for an observation.

Measures like coverage and interval width are helpful for evaluating prediction intervals. These metrics will be used in follow-up posts on Simulating Prediction Intervals and Quantile Regression for Prediction Intervals, where I walk through more sophisticated and flexible methods for building prediction intervals.

Appendix

Bayesian Inference

In this and the immediate follow-up posts I approach prediction intervals from a more frequentist perspective. However when you really care about thinking through the nature of uncertainty in your model, Bayesian methods are often more appropriate. I may cover Bayesian approaches in a future post, in the meantime, here are a few resources:

- The stan computing platform for Bayesian modeling and inference has various excellent R package interfaces. rstan, rstanarm, brms and tidybayes are the ones of greatest interest to me58.

- Michael Clark provides a helpful applied introduction to Bayesian analysis in R.

- Note also that you can interact with stan through the

parsnippackage by settingparsnip::set_engine(engine = "stan")for linear models. - There is also a python interface, PyStan. The most popular Bayesian library in python however is PyMC359. You might also check-out Tensorflow Probability60.

Pros & Cons

Upsides of prediction intervals using parametric methods with linear regression:

- Most common (largest number of people are familiar)

- Straightforward calculation with low computation costs

Downsides:

- Less flexible than alternative approaches.

- Prediction intervals produced in this way have a variety of strong assumptions that they depend on (generally more heavily than other approaches).

- Assumes the correct model (If your model overfits, the associated prediction intervals will likely be overly optimistic61).

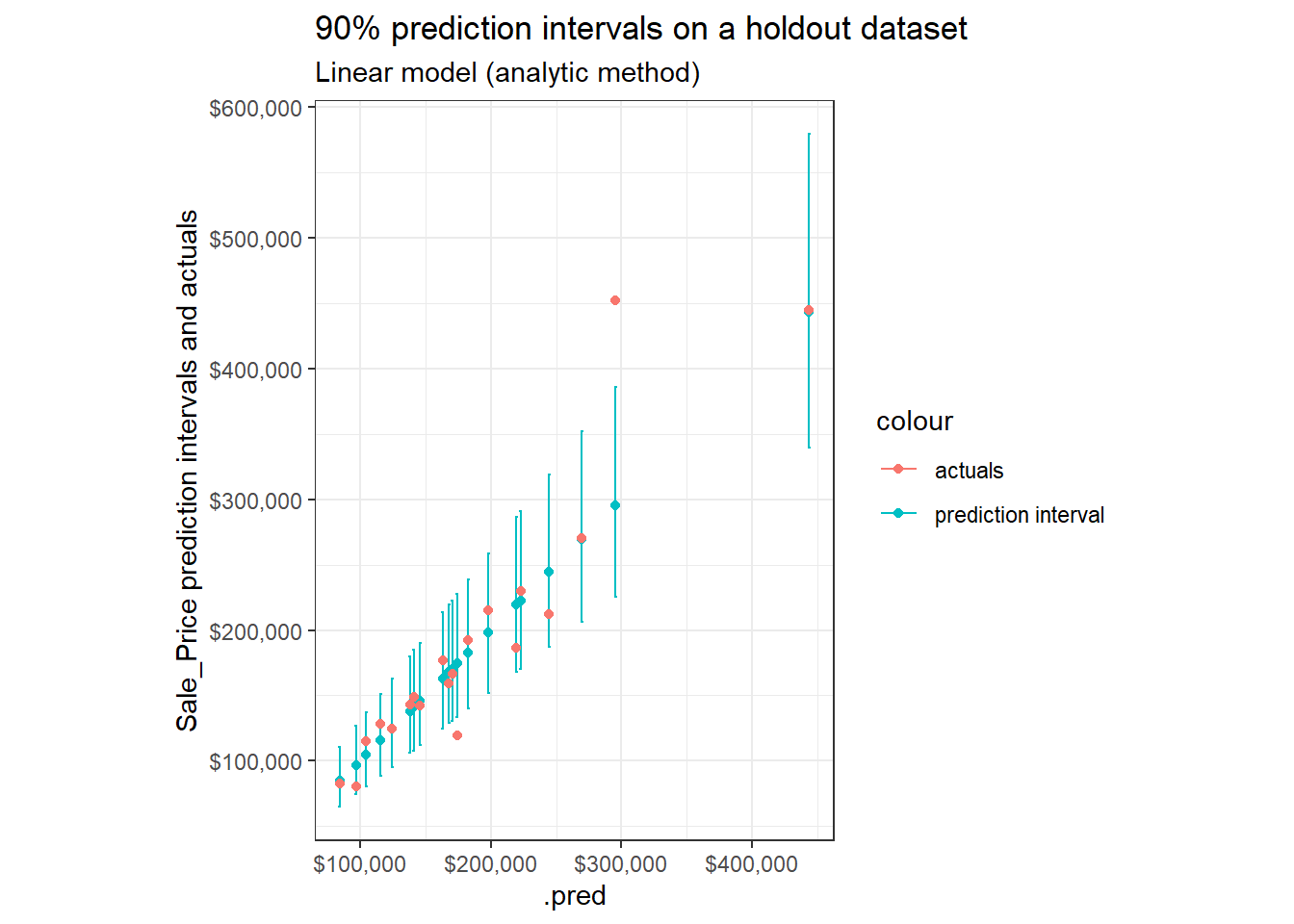

Prediction Intervals on Raw Sale Price

Because the model is on \(\log_{10}{(Sale\:Price)}\) when we transform our scale to be in terms of Sale_Price, the errors would increase with the predicted price of the house – hence why the transformed scales in Review Prediction Intervals are appropriate. The figure below shows the prediction intervals on the scale of raw Sale_Price.

p +

scale_x_continuous(labels = scales::dollar)+

scale_y_continuous(labels = scales::dollar)

parsnip Support for Prediction Intervals

Run the following code to check which parsnip supported models have modules for prediction intervals or quantiles (cross-posted here)".

library(tidyverse)

envir <- parsnip::get_model_env()

ls(envir) %>%

tibble(name = .) %>%

filter(str_detect(name, "_predict")) %>%

mutate(prediction_modules = map(name, parsnip::get_from_env)) %>%

unnest(prediction_modules) %>%

filter(str_detect(type, "pred_int|quantile"))In future posts, I will use some approaches that are not directly (currently) supported by predict methods in parsnip.

Non-standard, negotiated prices are common in the B2B world.↩︎

Analysts are sometimes tempted to compare the specific deal of interest against only historical deals that have similar attributes. The problem with attempting to do this is that, when you filter you lose data. After filtering across more than a couple dimensions, you likely will only have a few deals left in your sub-segment – too few to get a reliable sense of price variability. Prediction intervals offer a method for determining the variability of your estimates that spans across the multi-dimensional space that your data inhabits.

That said there are many situations where it does make sense to segment your data. Also the errors in your model should be independent from one another. During residual analysis you should ensure that there is not any bias across particular dimensions or ranges of data. Hence looking at particular segments of observations can be important – it’s just important to do so thoughtfully.

I’ve seen many occassions when an analyst keeps slicing their data and reviewing univariate relationships until they’ve convinced themselves they’ve found something – even when the sample sizes at this point are small and the nature of the relationship unconvincing.↩︎

This is similar to what I did on a previous post on pricing.↩︎

If I was being a little more careful, I’d also split the

traindata into a validation set or I’d usersampleto set-up cross validation. In this post though I largely usetesthow you might use a validation set though. See Tidy Modeling with R, 10.2 for more.↩︎It is important that we capture any uncertainty in our preprocessing steps, hence the need for using the recipes package.↩︎

There are many important features I do not include. I am also skipping a lot of important steps in predictive modeling, e.g. data exploration, review of model assumptions, use of a separate validation set, feature engineering, tuning, etc.].↩︎

A log transform on the target can be thought of roughly as changing the model to be about changes in percent of

Sale_Pricerather than rawSale_Price. Throughout the post I will be performing a log transformation on our target,Sale_Priceprior to modeling. Hence in some cases I make comparisons against the log of dollars offered OR I will use some metric that is in terms of percent rather than rawSale_Price. It is generally best to conduct model evaluation against whatever transformed scale is used in model building (i.e. in our caselog(Sale_Price, 10)). This is suggested in Tidymodels with R:“It is best practice to analyze the predictions on the transformed scale (if one were used) even if the predictions are reported using the original units.” -Kuhn, Silge

This may not apply perfectly in our case (as we are talking more about evaluation steps that would likely happen after initial model building and selection and has more to do with particular predictions)… however we will still keep this in mind and try to mindful of how we present our evaluation metrics.↩︎

Relative to prior deals which the model was trained on.↩︎

When you are dealing with problems that consider both magnitude and variability, statistics are often helpful. Statistics can help to provide a sense of the accuracy of the model and the extent to which an offer seems reasonable in the context of the variability in prices.↩︎

Statistics can provide context as to whether this represents a small or large deviation from expectations.↩︎

This is known as heteroskedasticity. We generally want our error metrics to be homoskedastic, i.e. ‘same variance’. This is why we built the model on the target

log(Sale_Price, 10). Note that the base rate of 10 doesn’t really matter that much, we could just have easily have built the model using the natural logarithm and gotten similar results.↩︎I chose this method as I thought it would be easier to explain and some readers may be intimated by logs. During model building and evaluation the former would often be more appropriate to review. In particular the root mean squared error (RMSE) of the errors of the predictions for

log(Sale_Price).↩︎Data not seen during model training and reserved for the purpose of model evaluation↩︎

Based on the observation level estimates given by our model on a holdout set.↩︎

Or a focus on other pieces of information outside of your model for making decisions, or going about addressing the problem in another way or redirecting your efforts towards a problem you can make more of an impact on.↩︎

As shown above in Considering Uncertainty.↩︎

Rather than just looking at your observation of interest through the lens of aggregate error metrics.↩︎

Or when using Bayesian methods, credible intervals.↩︎

Confidence intervals are more directly related to the standard error of your sample, while prediction intervals are associated with the standard deviation of the errors. The width of your confidence intervals follow the central limit theorem and are thus sensitive to the sample size of your data and become more narrow as you collect more observations (and you gain ‘confidence’ in where the ‘true’ expected value resides). For prediction intervals, more observations may improve your estimate of the model or give you more faith in your prediction intervals, however if you have sufficient observations, the width of the intervals will not necessarily shrink in any substantial way.↩︎

Confidence intervals are typically the default output of many packages and functions that output “intervals” – forecast and other forecasting packages though typically default to prediction intervals.↩︎

Again, speaking through a frequentist lens.↩︎

“Predictive band” may be a more appropriate term.↩︎

I.e. the band around the average value, rather than the band around the value for an individual observation – as discussed in A Few Things to Know About Prediction Intervals.↩︎

If this is unclear to you, try distributing the MSE term and look again. Penn State also has helpful lessons on regression available publicly online.↩︎

The square root of which is used to calculate the confidence interval.↩︎

This assumption of constant variability of the sample is common in many techniques for producing prediction intervals – even some techniques that use simulation based approaches.↩︎

To get the variance.↩︎

This is because your assumptions will influence the nature of the uncertainty in your model. When focused on prediction, you generally just care about minimizing error (in one form or another), assumptions regarding distributions of parameters, errors, etc. can often be ignored or at least relaxed.↩︎

Prediction intervals concern uncertainty, and whenever you are making estimates around uncertainty you are generally doing a kind of statistical inference.↩︎

Even when using simulation based techniques that, for other estimates, may allow you to be a little more cavalier regarding model assumptions… when it comes to predictive inference you will essentially always have some assumptions you need to hold onto.↩︎

You may be puzzled why differing uncertainty depending on the distance of a point is from the centroid of the data is not thought of as a kind of heteroskedasticity. My understanding is this is becuase that difference has to do with uncertainty in estimating the model, not uncertainty in the sample (which is assumed to be constant).↩︎

If we did not do this our prediction intervals would be far too large for smaller values of

Sale_Priceand far too small for larger values ofSale_Price.↩︎However there are limitations on assumption free predictive inference, (Barber, et al).↩︎

Model generalizability is a big topic I am going to just barely touch on it here.↩︎

That still fits the data well but is likely to overfit on the training data.↩︎

This is true of some models to a greater extent than others.↩︎

Representing the minimum number of observations in a node for it to be split.↩︎

Feature Engineering and Selection, 3.4 Resampling is a helpful resource for understanding the subtle distinction between these.↩︎

When

min_nis smaller.↩︎Again, when

min_nis smallest.↩︎This is likely the case even when you are planning on using the model to generate prediction intervals and not just point estimates.↩︎

Or some other reason, e.g. you are not able to retrain it or have some business constraint that means you are stuck with this model.↩︎

Tuning your alpha level until the coverage on a holdout dataset is where you want it.↩︎

This is typically the way this check would be used – if there is no difference, you can say there is no overfitting. If there is a difference, you may still be OK with that provided performance is still good enough (or better than alternatives) on a holdout dataset.↩︎

See [Prediction Intervals on Raw Sale_Price] in the Appendix for a chart that does not apply log transformations.↩︎

I.e. the rate at which the actual value fall within the range of the prediction interval (coverage is essentially checking whether the range you expect from your prediction intervals is actually what is achieved). For our 90% prediction intervals, we will expect coverage on a holdout dataset to be 90%.↩︎

The difference between 93% and the expected coverage of 90% may just be the result of random variability in the data.↩︎

Though they are perhaps slightly conservative. To get a better estimate for coverage, we could use cross validation as this will provide estimates for coverage across multiple iterations of model fitting.↩︎

As models tend to perform worse than expected when put into production. I.e. it is better that our empirical coverage is greater than our target coverage than less than our target coverage – even if it would be ideal for these to align.↩︎

“Marginal” coverage generally means “on average across observations”, whereas “conditional” coverage means conditioned on the specific attributes for an observation. Hence “90%” marginal coverage allows that coverage may be higher in some areas and lower in others. 90% conditional coverage means that the conditional rate should be 90% across observations. “Conditional” coverage is generally harder to get than “marginal” coverage and is actually impossible to achieve without some assumptions.↩︎

I ended-up splitting these based on predicted price. I think it may have made more sense actually to split these based on actual

Sale_Price. One of the chief reasons is that in future posts I view these same charts and by splitting it across predictions the groups are based on the predictions and intervals rather than on the actual values of the data. This means that it is possible that if the predictions change the groups change – hence future posts tests / comparisons may not be perfectly comparable.↩︎Mostly equal – there are 146 or 147 observations summarized in each row.↩︎

Not shown here, but it may also be valuable to review your coverage levels across key variables of interest.↩︎

More explicitly, this test shows that the distribution of

price_grouped by quintileacrosscoveredwould only occur ~3.4% of the time under the assumption of “no relationship”. This is very unlikely, so we reject the NULL hypothesis of “no relationship”.↩︎Alternatively could just have evaluated the interval widths in the

log(Sale_Price, 10)terms outputted by the model.↩︎While the seesaw analogy does make a difference in causing some observations to have wider intervals than others, in aggregate you can see the difference is not that big – values like the Mean Squared Error (MSE) or similar aggregate error metrics actually give a pretty close semblance of what you will expect to get when going through the process of creating prediction intervals specific to each observation. Note also however that the difference in interval width will still be greater for the more extreme observations. In future posts some of the methods I walk through will be more flexible or have other advantages (e.g. less strict reliance on distributional assumptions).↩︎

Along with rethinking which supports the “Statistical Rethinking” book and course – also see study guide↩︎

PyMC3 is built on Theano. If you are wondering why this is not being updated to rely on tensorflow (as many other tools based on theano have been), read The Future of PyMC3, or: Theano is Dead, Long Live Theano.↩︎

See video explaining how it uses Bayesian methods to provide uncertainty for parameter estimates in tensorflow).↩︎

Though linear models are less likely to overfit compared to most model types.↩︎